Kurcuma: a Kitchen Utensil Recognition Collection for Unsupervised Domain Adaptation

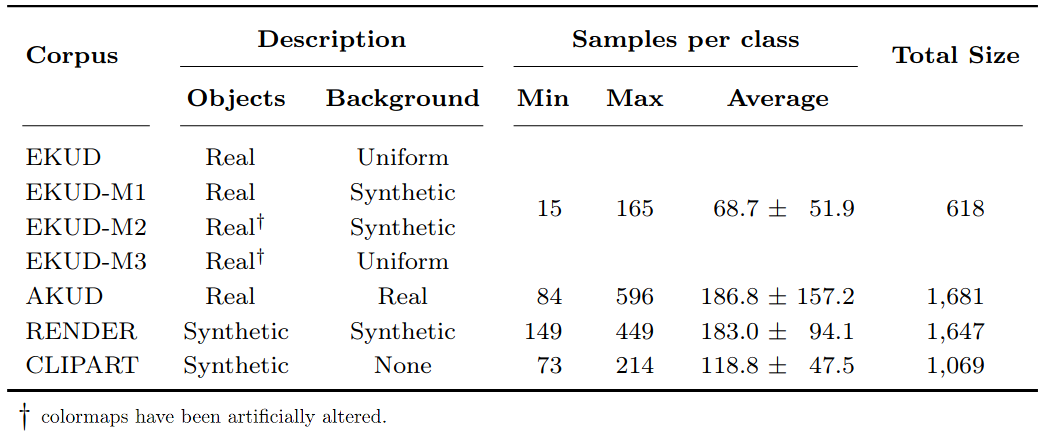

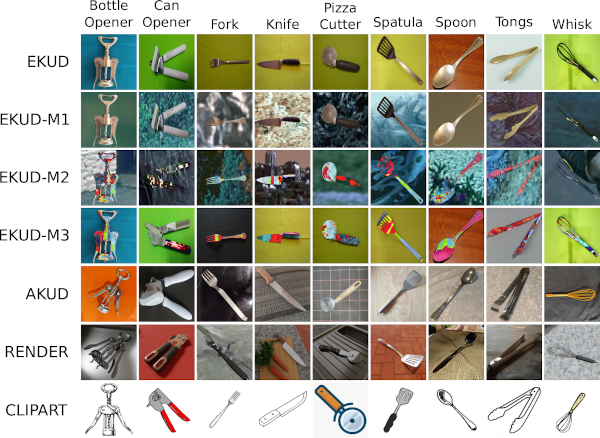

The collection comprises seven individual kitchen utensil corpora (four of them built by the authors), each with a varying number of color images at a spatial resolution of 300x300 pixels, distributed across the same 9 classes in all corpora. These sets, which constitute the data domains of the Kurcuma corpus, differ mainly in whether the objects and the background in each case are real or synthetic. The seven corpora are described below:

- Edinburgh Kitchen Utensil Database (EKUD): This corpus is a modification of the dataset available at https://homepages.inf.ed.ac.uk/rbf/UTENSILS/, which was originally created to train domestic assistance robots and comprises 897 real-world pictures of utensils on uniform backgrounds, distributed across 12 kitchen utensil classes with close to 75 images per class. When it was compiled for the Kurcuma collection, a data curation process was applied that merged the most easily confused classes, removed under-represented categories, and discarded low-quality examples, resulting in the 9 labels of the present collection and a total of 618 images.

- EKUD Real Color (EKUD-M1): Corpus generated by the authors by combining EKUD images with patches from BSDS500, following an approach similar to the one used to build the MNIST-M collection. In this case, only the background of the EKUD images was modified; the original color of the objects was kept.

- EKUD Not Real Color (EKUD-M2): Extension of EKUD-M1 in which the color of the objects was also altered by mixing it with the color of the patches used for the background.

- EKUD Not Real Color with Real Background (EKUD-M3): Third variation proposed by the authors, in which the color distortion devised for EKUD-M2 is applied directly to the original EKUD corpus, so that the object colors are altered while the original background is kept.

- Alicante Kitchen Utensil Database (AKUD): Collection developed by the authors by manually taking 1,480 photographs of the 9 kitchen utensil categories considered for the Kurcuma collection. It depicts real objects against different real-world backgrounds, covering a wide range of lighting conditions and perspectives.

- RENDER: Corpus generated by the authors by rendering synthetic images with the Blender tool, using different publicly available base models of utensils and backgrounds from the Internet. To ensure variability in the data, these images were created with different camera perspectives and illumination conditions, as well as a varied range of focal lengths for the virtual camera.

- CLIPART: Set of drawing-like images gathered by the authors from the Internet, representing each of the classes in the collection. The samples have no scene background: each figure is shown over a plain white background.
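The three EKUD-M variants described above can be illustrated with a minimal sketch. It is not the authors' generation code: the object mask, the helper names (`random_patch`, `blend`, `make_variant`), and the use of the MNIST-M-style absolute difference as the color "mixing" operator are all assumptions made here for illustration. The source only states that the approach is similar to MNIST-M.

```python
import numpy as np


def random_patch(texture, size, rng):
    """Crop a random size x size patch from a texture image of shape (H, W, 3)."""
    h, w = texture.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return texture[top:top + size, left:left + size]


def blend(image, patch):
    """Assumed mixing operator: per-channel absolute difference (as in MNIST-M)."""
    diff = patch.astype(np.int16) - image.astype(np.int16)
    return np.abs(diff).astype(np.uint8)


def make_variant(image, mask, patch, variant):
    """Build a hypothetical EKUD-M1/M2/M3-style image.

    image: (H, W, 3) uint8 picture with a uniform background.
    mask:  (H, W) bool array, True on object pixels (assumed to be available).
    patch: (H, W, 3) uint8 texture patch.
    """
    m = mask[..., None]           # broadcast the mask over the color channels
    mixed = blend(image, patch)   # object/background colors mixed with the patch
    if variant == "M1":           # new background, original object color
        return np.where(m, image, patch)
    if variant == "M2":           # new background, altered object color
        return np.where(m, mixed, patch)
    if variant == "M3":           # original background, altered object color
        return np.where(m, mixed, image)
    raise ValueError(f"unknown variant: {variant}")


# Toy data: a white 300x300 image with a red square standing in for a utensil.
rng = np.random.default_rng(0)
image = np.full((300, 300, 3), 255, dtype=np.uint8)
image[100:200, 100:200] = (200, 30, 30)
mask = np.zeros((300, 300), dtype=bool)
mask[100:200, 100:200] = True

# Random noise standing in for a natural-image texture source.
texture = rng.integers(0, 256, size=(321, 481, 3), dtype=np.uint8)
patch = random_patch(texture, 300, rng)

variants = {v: make_variant(image, mask, patch, v) for v in ("M1", "M2", "M3")}
```

In this sketch the only difference between the three variants is which pixels come from the original image, the patch, or the mixed image, which mirrors the real/not-real color and background distinctions in the descriptions above.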

The following table summarizes the main features of this collection of corpora:

Some examples of each dataset are shown in the figure below:

RELATED PUBLICATIONS

If you use these datasets, or part of them, please cite the following publications:

@article{Rosello2023,

author = {Adrian Rosello and Jose J. Valero-Mas and Antonio Javier Gallego and Javier Sáez-Pérez and Jorge Calvo-Zaragoza},

title = {Kurcuma: a Kitchen Utensil Recognition Collection for Unsupervised Domain Adaptation},

journal = {Pattern Analysis and Applications},

year = {2023}

}

@inproceedings{Saez2022,

author = {Javier Sáez-Pérez and Antonio Javier Gallego and Jose J. Valero-Mas and Jorge Calvo-Zaragoza},

title = {Domain Adaptation in Robotics: A Study Case on Kitchen Utensil Recognition},

booktitle = {Iberian Conference on Pattern Recognition and Image Analysis},

year = {2022}

}

DOWNLOAD

To download this dataset, use the following link:

ACKNOWLEDGMENTS

This work was supported by the I+D+i project TED2021-132103A-I00 (DOREMI), funded by MCIN/AEI/10.13039/501100011033. Some of the computing resources were provided by the Generalitat Valenciana and the European Union through the FEDER funding program (IDIFEDER/2020/003). The third author is supported by grant APOSTD/2020/256 from "Programa I+D+i de la Generalitat Valenciana".